Bad Bots Everywhere, Prophaze Protects Every Session

- On-prem / hybrid control

- Protect hidden APIs

- Block automated fraud

- Adaptive attack throttling

- 15-minute zero-code setup

- Geo-based blocking & intelligence

Bots Are Everywhere, Traditional Controls Can’t Tell Them Apart

Malicious automation now accounts for a large share of internet traffic, eroding revenue, inflating infrastructure costs, and overwhelming security teams, all while slipping past basic rate limits and CAPTCHAs. Without dedicated bot management, scrapers, credential stuffers, and fraud bots quietly exploit web apps and APIs long before fraud tools or analytics flag the damage.

Scraping engines copy pricing, content, and inventory data, slow down sites, and undercut margins across channels.

Credential‑stuffing bots weaponize leaked passwords to take over accounts at scale.

Volumetric and low‑and‑slow bot traffic bloats infrastructure bills and risks outages at peak times.

Fraud bots automate fake signups, card testing, and checkout abuse faster than manual teams can respond.

Rising Bot Attacks, Our BotCry™ Engine Delivers Multi-Layered Defense

Signature‑driven detection that fingerprints headers, devices, and IPs to quickly block known bad bots.

Smart, low‑friction challenges (JavaScript, cookies, invisible checks) that only step in when behavior looks risky.

Behavioral analytics that separate humans from automation using interaction patterns and behavioral biometrics.

Adaptive rate limiting that automatically throttles high‑frequency bots, scrapers, and brute‑force attacks in real time.

Centralized bot analytics and reporting so security, fraud, and business teams see impact in one place.
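Adaptive rate limiting of this kind is commonly built on a token bucket kept per client. The Python sketch below is a generic illustration, not the BotCry™ implementation: keying clients by IP address, the `TokenBucketLimiter` class, and its `capacity` and `rate` values are assumptions chosen for readability.

```python
from dataclasses import dataclass, field


@dataclass
class TokenBucketLimiter:
    """Per-client token bucket: a client may burst up to `capacity`
    requests, after which it is held to `rate` requests per second."""

    capacity: float = 10.0  # illustrative burst size (assumption)
    rate: float = 1.0  # illustrative refill in tokens/second (assumption)
    # client key -> (tokens remaining, timestamp of last update)
    _buckets: dict = field(default_factory=dict, init=False, repr=False)

    def allow(self, key: str, now: float) -> bool:
        tokens, last = self._buckets.get(key, (self.capacity, now))
        # Refill in proportion to elapsed time, capped at capacity.
        tokens = min(self.capacity, tokens + (now - last) * self.rate)
        if tokens >= 1.0:
            self._buckets[key] = (tokens - 1.0, now)
            return True
        # Out of tokens: throttle this request.
        self._buckets[key] = (tokens, now)
        return False


limiter = TokenBucketLimiter(capacity=3, rate=1.0)
results = [limiter.allow("203.0.113.7", now=0.0) for _ in range(5)]
print(results)  # [True, True, True, False, False]
```

Making it adaptive could mean lowering `rate` for a client as its risk score rises; that tuning policy is left as an assumption here.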

How the Prophaze BotCry™ Engine Shuts Down Bots in Real Time

Every request is scored in milliseconds across multiple detection layers, so even human‑like, evasive bots are exposed and stopped before they touch critical flows. BotCry™ pairs this deep inspection with smart mitigation actions that protect logins, checkouts, and APIs without slowing real users or overloading your infrastructure.
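One way to picture per-request scoring across several layers is a weighted combination of layer scores that is then mapped to a mitigation action. This Python sketch is an illustration under stated assumptions, not Prophaze's scoring model: the layer names, weights, thresholds, and the `risk_score` and `decide` functions are all hypothetical.

```python
from collections.abc import Mapping

# Hypothetical layer weights; a production engine would tune or learn these.
LAYER_WEIGHTS = {"signature": 0.5, "behavior": 0.3, "reputation": 0.2}

# Hypothetical action thresholds on the combined 0..1 risk score.
CHALLENGE_AT = 0.4
BLOCK_AT = 0.8


def risk_score(layer_scores: Mapping[str, float]) -> float:
    """Weighted average of per-layer scores, each expected in [0, 1].
    A layer missing from the input contributes 0 (no evidence)."""
    total_weight = sum(LAYER_WEIGHTS.values())
    weighted = sum(
        weight * layer_scores.get(layer, 0.0)
        for layer, weight in LAYER_WEIGHTS.items()
    )
    return weighted / total_weight


def decide(layer_scores: Mapping[str, float]) -> str:
    """Map a request's layer scores to allow / challenge / block."""
    # An exact match against a known-bad fingerprint blocks outright.
    if layer_scores.get("signature", 0.0) >= 1.0:
        return "block"
    score = risk_score(layer_scores)
    if score >= BLOCK_AT:
        return "block"
    if score >= CHALLENGE_AT:
        return "challenge"  # e.g. an invisible JavaScript check
    return "allow"


print(decide({"behavior": 0.1, "reputation": 0.2}))  # allow
print(decide({"behavior": 0.9, "reputation": 0.8}))  # challenge
print(decide({"signature": 1.0}))                    # block
```

Keeping the thresholds separate from the scoring makes it possible to move the challenge line without touching the detection layers, so friction appears only for traffic that already looks risky.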

Signature intelligence

Correlates headers, devices, and IPs with known bot fingerprints to instantly block commodity and reused tooling.

Behavioral analytics

Tracks navigation, mouse movement, scrolling, and typing cadence to separate real humans from scripted automation.

Good‑bot allowlisting

Identifies and preserves essential bots, such as search engine crawlers, monitoring services, and partner integrations, so protection doesn't break business‑critical automation.

Prophaze Bot Protection

Smart challenges

Uses JavaScript checks, cookie tests, and invisible challenges so only suspicious traffic ever sees friction.

API endpoint monitoring

Baselines traffic to public, internal, and shadow APIs and flags anomalous patterns before bots can exploit them.

Geo visibility & blocking

Maps bot traffic by country, ASN, and region so you can instantly clamp down on high‑risk geos without touching legitimate markets.
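Good‑bot allowlisting only holds up if a crawler's claimed identity is checked, because user-agent strings are trivially spoofed. A widely used, publicly documented technique is forward-confirmed reverse DNS, which Google describes for verifying Googlebot. The Python sketch below is a generic illustration, not Prophaze's implementation; the function names and the suffix tuple are assumptions, and it covers IPv4 only because `socket.gethostbyname_ex` does.

```python
import socket
from collections.abc import Callable, Iterable

# Example suffixes for one crawler; each good-bot operator publishes its own.
GOOGLEBOT_SUFFIXES = (".googlebot.com", ".google.com")


def reverse_lookup(ip: str) -> str:
    """PTR lookup: IP address -> hostname."""
    return socket.gethostbyaddr(ip)[0]


def forward_lookup(host: str) -> list[str]:
    """A-record lookup: hostname -> list of IPv4 addresses."""
    return socket.gethostbyname_ex(host)[2]


def is_verified_crawler(
    ip: str,
    allowed_suffixes: Iterable[str],
    reverse: Callable[[str], str] = reverse_lookup,
    forward: Callable[[str], list[str]] = forward_lookup,
) -> bool:
    """Forward-confirmed reverse DNS: the IP's PTR name must end in an
    allowed domain, and that name must resolve back to the same IP."""
    try:
        host = reverse(ip).rstrip(".").lower()
    except OSError:
        return False  # no PTR record, so the claim cannot be verified
    if not host.endswith(tuple(allowed_suffixes)):
        return False  # PTR points outside the crawler operator's domains
    try:
        return ip in forward(host)
    except OSError:
        return False  # hostname does not resolve
```

The resolver parameters exist so the check can be exercised without live DNS; in production the defaults perform two lookups per check, so results are usually cached per IP.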

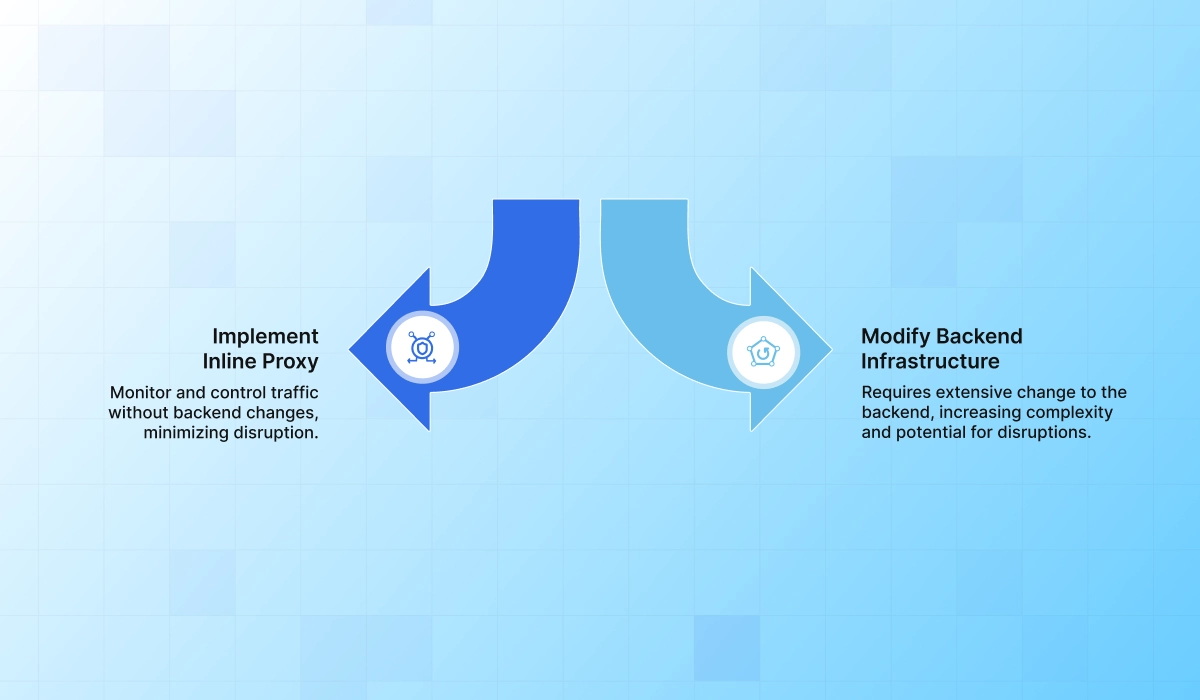

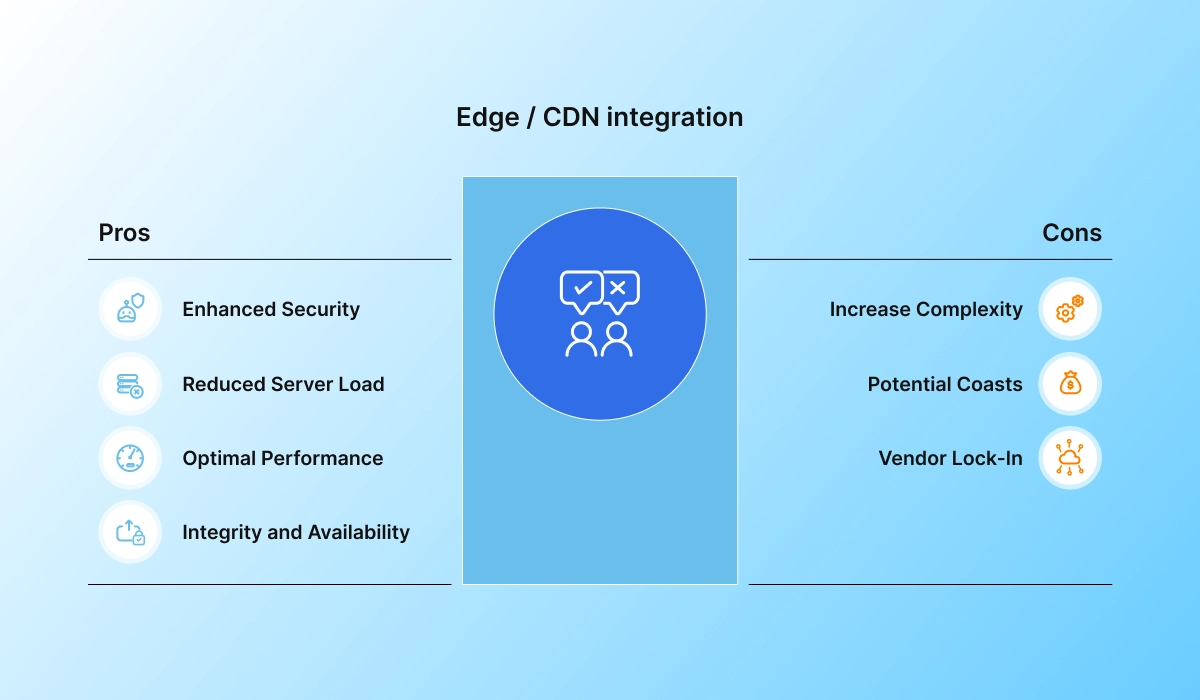

Bot Protection That Fits Your Architecture

Prophaze deploys seamlessly across modern and legacy environments without disrupting workflows.

From Invisible Bot Abuse to Full Control in Three Moves

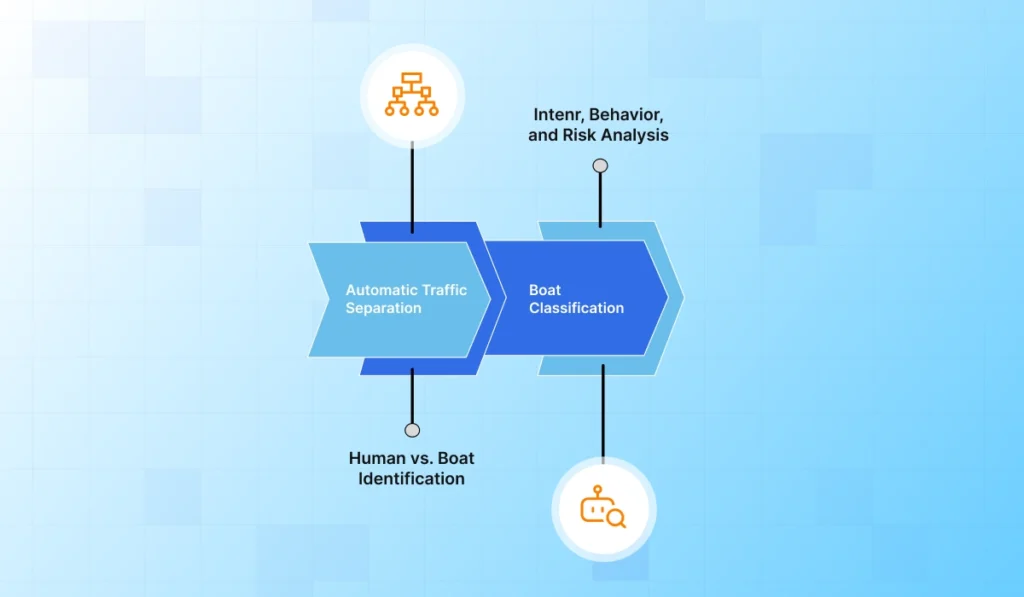

Discover and baseline bot activity

- Automatically separate human and automated traffic across every site, app, and API so you finally see where bots hit.

- Classify bots by intent, behavior, and risk level to distinguish good crawlers from scrapers, stuffers, and fraud bots.

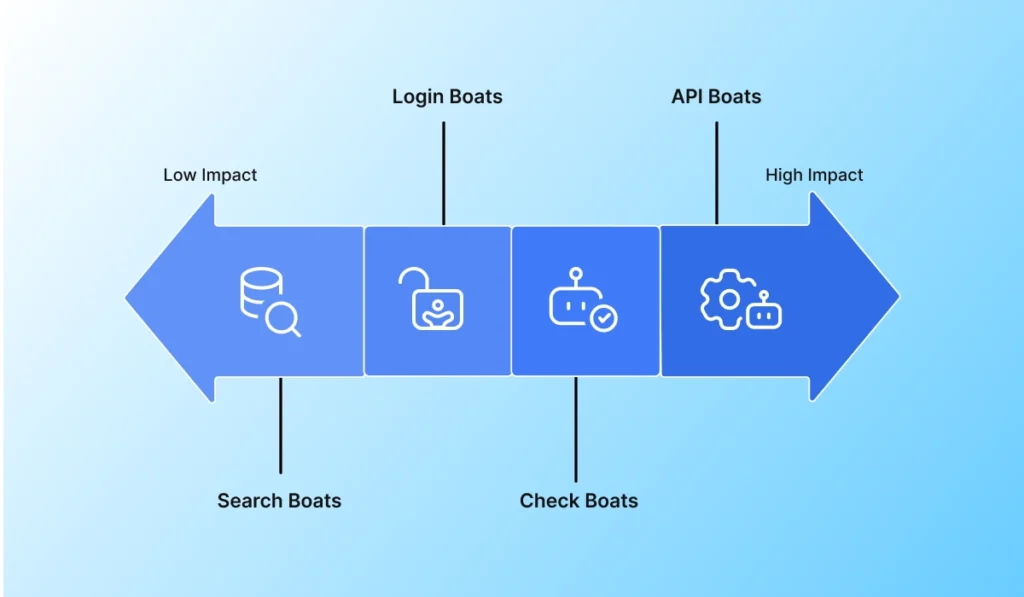

Assess and prioritize business risk

- Pinpoint high‑impact abuse on logins, checkout, search, and APIs where bots drain revenue or distort analytics.

- Score bot threats based on financial, performance, and security impact so teams know which attacks to fix first.

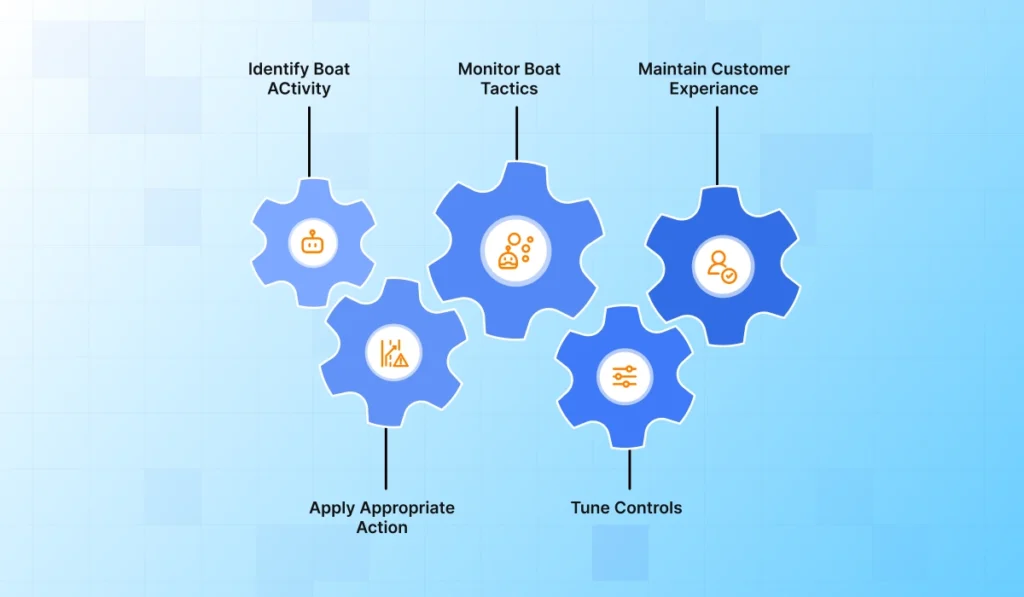

Block and adapt in real time

- Apply the right action per bot—rate limits, smart challenges, redirects, or hard blocks—without touching application code.

- Continuously tune controls to stop evolving bot tactics while keeping the experience fast, frictionless, and available for real customers.

- Healthcare

- Bot Mitigation

Deflecting 250 Million Application & API Attacks on Healthcare Systems with Prophaze WAAP

SecOps Chasing False Positives, Prophaze Turns Bot Traffic into Actionable Intel

Prophaze Bot Protection is engineered for platforms where every release, campaign, or traffic spike attracts scrapers, credential stuffers, and fraud bots, and it protects them without giving DevSecOps teams more code to maintain. It slots into existing architectures and pipelines, so teams get precise bot control at scale while keeping experiences fast for real users.

AI behavioral fingerprinting for human‑like bots.

Real‑time bot classification for critical flows.

API monitoring with traffic anomaly scoring.

No SDKs or rewrites, minimal latency.

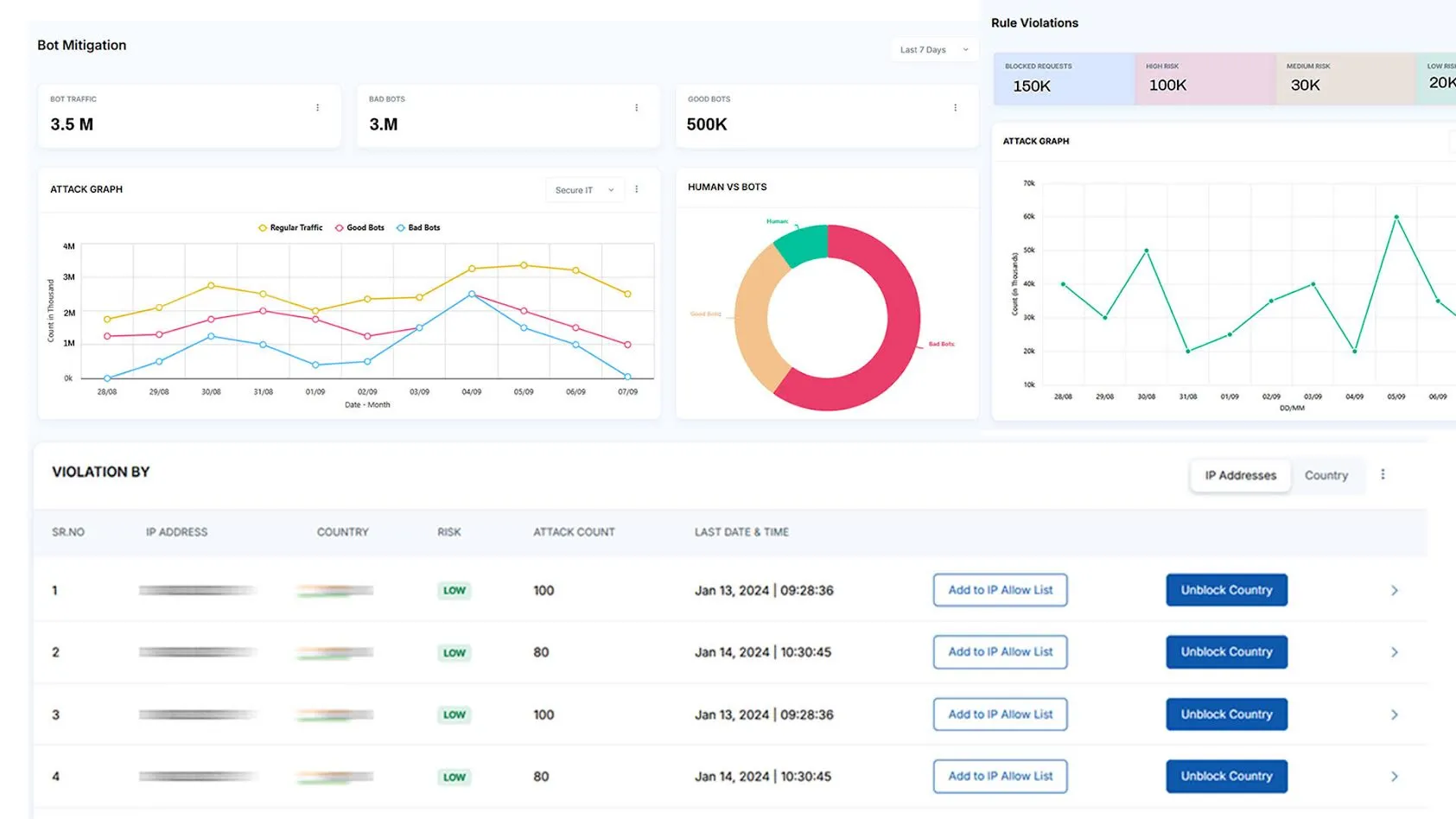

Bot Traffic Hard to Read, Prophaze Puts It All in One Dashboard

Visualize bot and human traffic with live attack maps by region, network, and IP reputation.

Use heatmaps to highlight where suspicious activity and attack rates are spiking.

Break down API traffic anomalies to see which endpoints and methods bots are targeting.

Track status codes, IPs, and user agents to separate good crawlers, bad bots, and real customers.

Export detailed logs and reports for audits, compliance reviews, and executive reporting.

Trusted by High-Risk, Revenue-Critical Platforms

Start Blocking Bad Bots Without Blocking Customers

- Stop bots before they impact revenue

- Protect websites, APIs, and cloud-native apps

- Deploy in minutes, no engineering effort